It was not a flashy livestream. No press confetti. Not even a well-timed leak from a “source close to the matter.”

But make no mistake: OpenAI’s gpt-oss launch was a thunderclap in a clear sky.

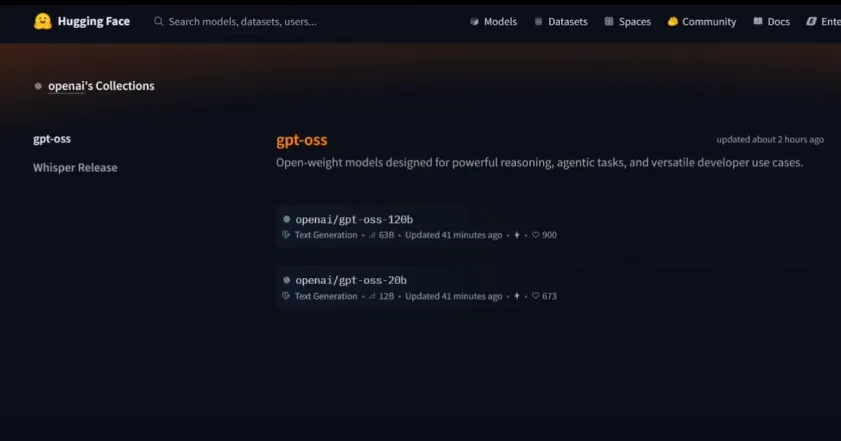

If you blinked, you might have missed it. A GitHub repo went live. A terse, almost cryptic blog post followed. And just like that, gpt-oss, OpenAI’s first open-weight large language model series since GPT-2, entered the world, grinning like it had nothing to prove. But it does. Because despite its name, gpt-oss is not just another open-source model. For example: “Both gpt-oss-120b and gpt-oss-20b were released with open weights under the flexible Apache 2.0 license, and they deliver a level of performance that challenges proprietary models.”

Why gpt-oss Just Dropped, and Why It Matters

That’s the question keeping AI Twitter busy, and Slack backchannels even busier.

See, OpenAI has never been particularly… generous with its model weights. Up until now, it played the “safety” card—probably with some sincerity, definitely with some self-interest. But suddenly, here it is: gpt-oss-20b, and its much larger, much louder sibling, gpt-oss-120b—both released with open weights. Now, “open-weight” does not mean “do whatever you want.” These are not totally free-roaming agents. But still, we are talking about foundation models with state-of-the-art reasoning capabilities, made available for real-world tinkering. That’s a seismic shift, not just a product update.

My theory? Pressure. Simple as that.

Meta’s LLaMA family made “open-ish” the new cool. Mistral’s models are lean and shockingly clever. And then there’s the rogue’s gallery of high-performers cobbled together by the open-source community (Mixtral, Dolphin, OpenHermes, etc.), all getting uncomfortably good.

OpenAI needed to reassert dominance in a space it helped spark but quickly distanced itself from. And gpt-oss is its answer.

gpt-oss Key Features for Developers

Let’s talk brass tacks. There are two core releases (so far): gpt-oss-120b and gpt-oss-20b.

Local hosting + transparency = control

Need full control? Run gpt‑oss‑120b offline, behind firewalls. Want lightweight? gpt‑oss‑20b on your laptop. No API calls. No cloud vendor lock‑in.

Chain-of-thought reasoning is supported, too. The models expose their intermediate reasoning steps so developers can audit and trace the logic (important for safety).
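To make the local-hosting idea concrete, here is a minimal sketch of what running the smaller model on your own machine could look like with Hugging Face Transformers. Treat the details as assumptions rather than gospel: the repo id `openai/gpt-oss-20b`, the `Reasoning: <level>` system-prompt convention for dialing reasoning effort, and the helper names `build_messages` and `generate_locally` (which are made up for this example). The first call to `generate_locally` downloads the weights, so expect a wait.

```python
MODEL_ID = "openai/gpt-oss-20b"  # assumed Hugging Face repo id

REASONING_LEVELS = {"low", "medium", "high"}


def build_messages(prompt, reasoning="medium"):
    """Build a chat-style message list with a reasoning-effort hint.

    The "Reasoning: <level>" system prompt is an assumed convention
    for gpt-oss; adjust it if your runtime expects something else.
    """
    if reasoning not in REASONING_LEVELS:
        raise ValueError(f"reasoning must be one of {sorted(REASONING_LEVELS)}")
    return [
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "user", "content": prompt},
    ]


def generate_locally(messages, max_new_tokens=256):
    """Run the model on local hardware; no hosted API is called."""
    # Imported lazily so the message helper works without transformers installed.
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=MODEL_ID,
        torch_dtype="auto",   # let the library pick a suitable dtype
        device_map="auto",    # place weights on whatever GPU/CPU is available
    )
    outputs = pipe(messages, max_new_tokens=max_new_tokens)
    # For chat input, generated_text is the full conversation;
    # the last entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Usage would be something like `generate_locally(build_messages("Summarize this log file.", reasoning="high"))`. Once the weights are cached, nothing in that call needs the network.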

Safety testing baked‑in

OpenAI delayed the launch twice in July to run extra safety checks. They even created adversarial “bad actor” versions internally to push misuse boundaries. Fortunately, their risk model showed low capability in malicious tasks. External experts had a look too. So while open-weight can feel nettlesome, OpenAI insisted on rigor.

Broad availability

These models landed on major platforms simultaneously: Hugging Face, Azure, AWS Bedrock, SageMaker JumpStart—making deployment nearly seamless everywhere. AWS says gpt‑oss‑120b is up to three times more price‑efficient than DeepSeek‑R1, Gemini, or even o4.

Benchmarks Are One Thing, But How Does gpt-oss Feel?

Ah, the gpt-oss benchmarks. They are, predictably, impressive.

- MMLU: >80% on the 120B variant

- GSM8K: Strong, with a few “hmm” moments in long-chain math

- ARC-Challenge: Competitive with Claude 2.1

- HumanEval: Nearly identical to GPT-4 Turbo in raw coding tasks

But forget the numbers for a second. Because the real magic with gpt-oss—and I do not use that word lightly—is in how stable it feels under cognitive load.

| Model (open-weight) | Approx. Benchmark | Typical Hardware | Use Case |
| --- | --- | --- | --- |
| gpt-oss-120b | Matches o4-mini | 80 GB GPU / high-end laptop | High-end reasoning, agentic workflows |
| gpt-oss-20b | Near o3-mini | ~16 GB desktop/laptop | On-device coding, math, research |
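Those hardware figures are easier to trust with some back-of-the-envelope arithmetic. The sketch below estimates weight memory as parameters × bits per parameter ÷ 8. The inputs are assumptions, not numbers from this post: roughly 117B and 21B total parameters, about 4.25 bits per parameter (MXFP4-style quantization), and a flat 20% allowance for activations and cache. Real checkpoints will differ somewhat because not every tensor is quantized the same way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    total_params: float    # assumed total parameter count
    bits_per_param: float  # assumed average bits per stored weight

    def weight_gb(self) -> float:
        """Approximate weight size in decimal gigabytes."""
        return self.total_params * self.bits_per_param / 8 / 1e9


def fits(spec: ModelSpec, memory_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Rough check: weights plus a flat overhead allowance vs. available memory."""
    return spec.weight_gb() * (1 + overhead_fraction) <= memory_gb


# Assumed figures, for illustration only.
GPT_OSS_120B = ModelSpec("gpt-oss-120b", 117e9, 4.25)
GPT_OSS_20B = ModelSpec("gpt-oss-20b", 21e9, 4.25)

for spec, memory_gb in [(GPT_OSS_120B, 80), (GPT_OSS_20B, 16), (GPT_OSS_120B, 16)]:
    print(f"{spec.name}: ~{spec.weight_gb():.1f} GB of weights, "
          f"fits in {memory_gb} GB: {fits(spec, memory_gb)}")
```

Under these assumptions the larger model comes out around 62 GB of weights and the smaller around 11 GB, which lines up with the 80 GB and ~16 GB rows in the table.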

Want alternatives? Sure, DeepSeek and Meta’s Llama 4 made waves earlier in 2025, but gpt-oss is OpenAI’s homegrown, battle-tested open-weight offering. It’s not fully open-source (no training data), but under the Apache 2.0 license, developers can fine-tune and adapt it.

A New Game: OpenAI’s Hybrid Strategy

There is a subtle strategic shift here. OpenAI is no longer just the high priest of proprietary models. It’s signaling a hybrid future.

Do you want ChatGPT? Cool, use the API, pay by the token.

Do you want full model access, to build your own vertical tools, run offline, or audit deeply? Here—gpt-oss is yours.

This dual-track model serves multiple masters: policymakers asking for transparency, researchers demanding reproducibility, and enterprises who are wary of vendor lock-in.

But it is also a message to the broader open-source AI community:

“We see you. And we are not above joining your game. But we are going to do it better.” A little smug? Maybe. Deserved? Hard to argue.

What’s Next for gpt-oss?

- More community forks with task-specific fine-tuning.

- Independent benchmark comparisons using OSS-Bench-style tools (e.g., OSS-Bench, which generates live coding tasks).

- Potential future releases: a larger gpt-oss, or a multimodal version? Altman hinted at a return to openness long ago. We may see gpt-oss-5XX someday.

What’s Real: A Quick Use-Case Scene I Imagined

Picture this: you’re a small biotech startup. Sensitive patient work. APIs verboten. You download gpt-oss-120b and deploy it in-house. It parses clinical trial logs, drafts protocols, even writes code to automate analysis steps. Chain-of-thought gives auditability. You’re not just being lean; you’re safe. That, friends, is where gpt-oss aims to land.

Final Thought: A Game-Changer or a Half-Step?

Call me sentimental, but gpt-oss feels like more than just code weights and parameter counts. It is a small but meaningful recalibration of the power structure in AI. OpenAI, the company many accused of abandoning openness, just threw a ladder back down.

Not to be cute about it, but it is open. It is sharp. And it might be one of the most important things OpenAI has released since the original GPT-3. The question now is: What will we build with it?

What do you think? Drop your take in the comments. How are you planning to try gpt‑oss? Or where do you see potential trouble, or opportunity?